A small comment by Microsoft’s Thamin Karim at the European AppV User Group event in Amsterdam last week caused me to re-think streaming in App-V 5. It was one of those “duh!” moments that occur when something so obvious hits you and you realize that you hadn’t really thought about it and were operating on wrong assumptions.

The comment was, in essence, that with App-V 5 you get slightly better performance [in certain situations] by not preparing the App-V package for streaming and relying on “fault streaming” instead. Here are my thoughts on this.

Streaming and Performance

When stuff needs to be transmitted over networks, we use the term streaming to indicate a difference in transmission/consumption. Rather than transmitting the entirety of the stuff, and then operating on it, the operation portion is allowed to start prior to receiving the entirety.

Streaming is a major App-V feature that allows apps to run without being present locally. The native operating system is built on the assumption that the entirety of a file is either present locally on disk, or sits on a remote share and is loaded fully into memory, before operations on it are enabled (meaning before the application is allowed to run as a process). Streaming is used in App-V both to improve performance (allowing the application to start sooner or using fewer local resources), and to save on local resources (by avoiding local caching of things not really needed).

When an application starts without App-V, a detailed analysis of the start-up period shows the process bouncing back and forth between CPU and disk I/O. Initially, there is disk I/O to read the PE header, and other portions, of the exe. This is followed by some processing, then more I/O. This bouncing back and forth continues for some time.

Side-note: App Pre-Fetch

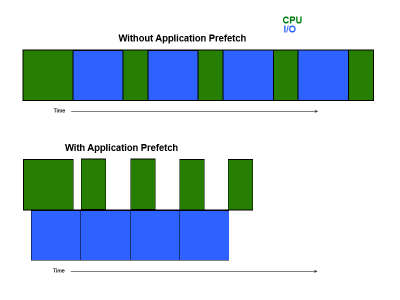

To explain this, let me start with an example using another Microsoft performance boosting technique: “App Pre-Fetch”. Microsoft uses this method to improve the performance of that critical start-up period somewhat. App Pre-Fetch pays attention to the loading order of dlls by an application during the first 10 seconds the process is running and writes the locations of these to a “.pf” file stored in a subfolder under the windows folder. This .pf file is automatically read by the system whenever a new process is spawned to run that exe, and in the background these dlls are queued to be read even before the exe gets around to reading them. This speeds up application startup by eliminating the wait time that occurs without it. In the accompanying depiction (illustrative, not actual measurements) you can see how, without App Pre-Fetch, these operations are serialized and take longer than when pre-fetch is used.
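The difference between the serialized and pre-fetched timelines can be sketched as a toy model. This is only an illustration in the same spirit as the drawing: the function names are hypothetical, the millisecond figures are invented rather than measured, and the model assumes a single background reader that fetches the dlls in the recorded order.

```python
def serialized_time(steps):
    """Without pre-fetch: each read must finish before the CPU work that needs it."""
    return sum(io + cpu for io, cpu in steps)


def prefetched_time(steps):
    """With pre-fetch: reads are queued in the background in the recorded order,
    and the CPU work for a step starts as soon as its data has arrived."""
    io_done = 0   # time at which the background reader finishes the current dll
    cpu_done = 0  # time at which the process finishes its current chunk of work
    for io, cpu in steps:
        io_done += io
        cpu_done = max(cpu_done, io_done) + cpu
    return cpu_done


# (disk_ms, cpu_ms) per dll. Invented numbers, not measurements.
steps = [(30, 10), (20, 15), (25, 5)]

print(serialized_time(steps))  # 105
print(prefetched_time(steps))  # 80
```

In this model the saving comes entirely from overlapping disk reads with processing; the total amount of I/O is unchanged.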

This pre-fetch affects only dll file reading and not additional files, but you can measure the impact by locating and deleting the .pf file on a system and running the app.
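If you want to try that experiment, a small script can locate the .pf files for a given exe. This is a minimal sketch with some assumptions that are mine: the helper name `find_prefetch_files` is hypothetical, the folder is assumed to be `Prefetch` under the Windows directory, and the files are assumed to be named after the exe in upper case followed by a dash and a hash. Reading that folder normally requires an elevated prompt.

```python
import os
from pathlib import Path


def find_prefetch_files(exe_name, prefetch_dir=None):
    """Return the .pf files whose name starts with EXE_NAME followed by a dash.

    prefetch_dir defaults to the Prefetch folder under %SystemRoot%
    (an assumption; pass another folder to override it).
    """
    if prefetch_dir is None:
        prefetch_dir = Path(os.environ.get("SystemRoot", r"C:\Windows")) / "Prefetch"
    prefix = exe_name.upper() + "-"
    return sorted(
        p for p in Path(prefetch_dir).iterdir()
        if p.name.upper().startswith(prefix) and p.suffix.lower() == ".pf"
    )
```

Calling `find_prefetch_files("notepad.exe")` on a Windows machine lists the candidates; delete them by hand (or with `Path.unlink`) before timing the next launch, then compare against a later launch once the file has been regenerated.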

Note that App-V does not prevent this pre-fetch from improving launch performance; however, the effect is in place only for the second and subsequent launches at the client.

App-V Stream Training

When we perform stream training activities inside the sequencer by launching the application in the Streaming Configuration phase of the sequencer, we are trying to achieve a similar effect for the first launch of the application. The difference here is that we consider ALL file I/O activity, including the exe file pages, dlls, and other asset files read in.

A big difference, however, is that by design App-V reads in all of those files first, and only then allows the app to start consuming CPU.

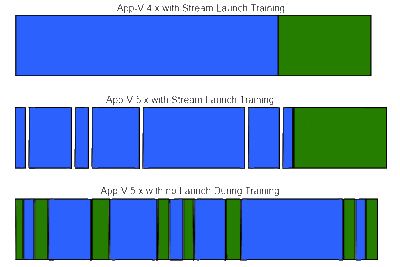

In App-V 4.x, these portions of the files are placed contiguously in the .SFT file and streamed using a single request from the client. Assuming the actual disk placement of the SFT file on the server is not fragmented, this produces an optimal stream transfer time to get the files into the local cache (and on first launch, as a byproduct of streaming, into memory). Once the pieces are in place the small startup CPU completes quickly.

In App-V 5.x, the “jigsaw file system” (SFT) is no longer used, and files are stored as complete files inside the App-V file regardless of stream training. Stream training affects only an XML metadata file that dictates which portions are needed. The App-V client and drivers use this metadata to make multiple requests to stream over the required content. While ensuring that the App-V file is not fragmented will speed up reading of a single file, it tends not to improve the multiple-file requests as much.

In the accompanying depiction (as before, this is illustrative and not taken from any real measurements) you can see how the launch performance with training could be slightly different.

In the App-V 5.x with Stream Launch Training example, the small gaps shown in file transfer represent a small delay that occurs as the client processes additional requests in the list. This delay is probably small enough that it shouldn’t be visible on a drawing of this scale, so I exaggerated it.

The case for not launching during training

In App-V 5, when Shared Content Store mode is in use, all launches appear similar to the “first launch” scenario.

While I do not have any test numbers to prove this, it is reasonable to assume that with App-V 5, in a scenario where Shared Content Store mode is in use, performing a launch during training could actually slow down the launch by requiring that no CPU is expended until everything is in place. This is especially the case when the training portion becomes larger.

I expect the difference in the amount of time before the application is ready for user interaction to be quite small. Without launch training, the user would certainly start to see some application UI elements earlier than when launch training is performed. And while I am not convinced it matters much whether you launch or not in this scenario, the point here is that it probably doesn’t affect performance much and you should probably stop performing the extra work of training the streaming.
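To make that reasoning concrete, here is a toy model comparing a trained launch with a fault-streamed one. It is only a sketch under my own assumptions: the function names are hypothetical, every number is invented (I still have no measurements), `ui_step` marks the step after which the first UI element appears, and `request_overhead` stands in for the small per-request delay of streaming on demand.

```python
def trained_launch(steps, ui_step):
    """Trained launch: every trained read completes before any CPU work begins."""
    total_io = sum(io for io, _ in steps)
    first_ui = total_io + sum(cpu for _, cpu in steps[:ui_step + 1])
    ready = total_io + sum(cpu for _, cpu in steps)
    return first_ui, ready


def fault_streamed_launch(steps, ui_step, request_overhead=0):
    """Fault streaming: each read is requested only when the process needs it."""
    elapsed = 0
    first_ui = None
    for i, (io, cpu) in enumerate(steps):
        elapsed += request_overhead + io + cpu
        if i == ui_step:
            first_ui = elapsed
    return first_ui, elapsed


# (stream_ms, cpu_ms) per step. Invented numbers, not measurements.
steps = [(40, 10), (30, 10), (50, 20), (60, 10)]
ui_step = 1  # first UI element appears after the second step

print(trained_launch(steps, ui_step))                             # (200, 230)
print(fault_streamed_launch(steps, ui_step, request_overhead=2))  # (94, 238)
```

With these made-up figures the first UI element shows up much earlier without training, while the time until the application is fully ready differs only by the accumulated request overhead.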

But keep in mind that if you let the user see the streaming indication (a configuration option added back in during SP2 that adds a streaming percentage progress bar above the icon tray), then performing streaming training can be useful, not for performance but just to give the user some feedback that something is happening before the application GUI displays. Under different scenarios, such as an SCCM distribution using HTTP streaming off of the DP and without SCS mode enabled, this can be quite useful.

So I’m not going to answer the question “should you launch?” In this post I don’t even touch on some of the other considerations involved. The answer can only be “it depends”. It is a complicated question that must take into consideration how you distribute, how you configure the clients, and whether or not clients can go offline. But please stop doing it just because we told you to do it in App-V 4.x.